Step-by-Step Guide to Monitoring Riak Using Telegraf and MetricFire

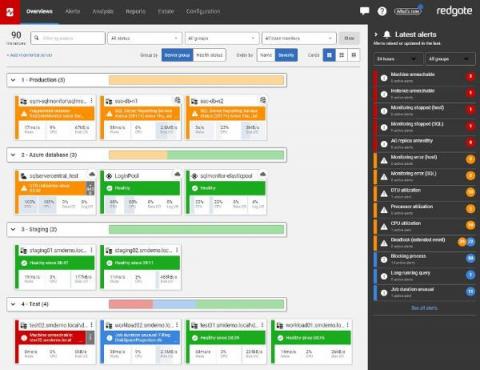

Monitoring your databases is essential for maintaining the performance, reliability, security, and compliance of your infrastructure. It lets you stay ahead of potential issues, optimize resource utilization, and keep your database system running smoothly and efficiently. Effective monitoring of Riak involves collecting, analyzing, and acting on a range of metrics and logs.