Webinar Recap: Taming Data Complexity at Scale

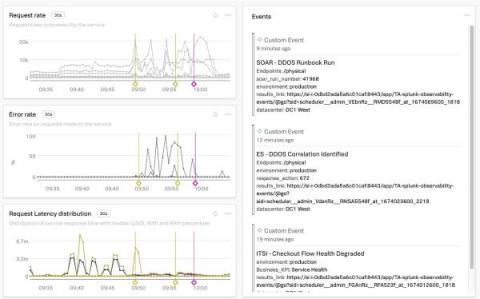

As a Senior Product Manager at Mezmo, I understand the challenges businesses face in managing data complexity and the higher costs that come with it. The explosion of data in the digital age has made it difficult for IT operations teams to control that data and deliver it to the teams that need it, for use cases ranging from troubleshooting issues in development to responding quickly to security threats.