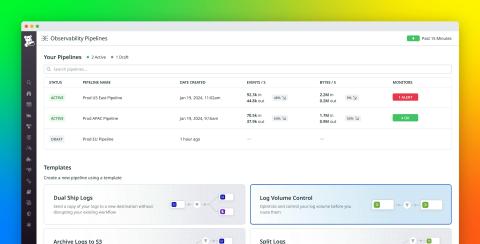

Control your log volumes with Datadog Observability Pipelines

Modern organizations face a challenge in handling the massive volumes of log data, often scaling to terabytes, that they generate across their environments every day. Teams rely on this data to identify, diagnose, and resolve issues more quickly, but how and where should they store logs to best suit this purpose? For many organizations, the immediate answer is to consolidate all logs remotely in higher-cost indexed storage so that they are ready for searching and analysis.